jBPM engine itself does not require to have knowledge about users and groups but to build more complete platform based on it sooner or later users and their memberships will be needed. Prior to version 5.3, jBPM relied on basic (and demo only) setup based on some property files. With 5.3 you can make use of integration that allow to employ existing LDAP server.

So, let's start digging into it :)

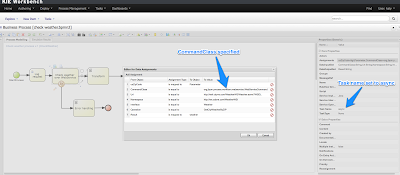

User and group information are relevant to two components:

Human task server has bit more to do with users and groups. First of all it requires it when assigning task to entities (either user or group). Next, in case notification mechanism is configured it requires to fetch more information about the entity (such as email address, etc.)

Bit of heads up is always welcome, but how this can be configured and used? Firstly, we need to have a LDAP server that could be used as user repository. If you have already one this step can be omitted.

Install and configure LDAP server of your choice, I personally use OpenLDAP that can be downloaded and used freely for evaluation purpose. Regardless of your choice most likely the best guide on installation and configuration is to be found on the server home page.

Note: make sure that when configuring your LDAP server inetOrgPerson schema is included otherwise import of example ldif will fail.

Once the server is up and running it's time to load it with some information that will be used later on by jBPM. Following is a sample setup of users and groups that matches the one used in previous versions of jBPM5 (Example ldif file expect that there is already domain configured (dc=jbpm,dc=org)):

Sample LDIF file

Rest of the post assumes that LDAP server is installed on the same machine as application server that hosts jbpm (but it is not limited to that).

Next step is to configure application server that host jbpm console to authenticate users using LDAP instead of the default property file based security domain. In fact there are no changes needed to jbpm console itself but only to JBoss AS7 configuration.

To use LDAP, jbpm-console security domain inside standalone.xml file should be replaced with:

<security-domain name="jbpm-console">

<authentication>

<login-module code="org.jboss.security.auth.spi.LdapExtLoginModule" flag="required">

<module-option name="baseCtxDN" value="ou=People,dc=jbpm,dc=org"/>

<module-option name="baseFilter" value="(uid={0})"/>

<module-option name="rolesCtxDN" value="ou=Roles,dc=jbpm,dc=org"/>

<module-option name="roleFilter" value="(member=uid={0},ou=People,dc=jbpm,dc=org)"/>

<module-option name="roleAttributeID" value="cn"/>

<module-option name="allowEmptyPasswords" value="true"/>

</login-module>

</authentication>

</security-domain>

This is pure JBoss configuration so in case of more advanced setup is required please visit JBoss AS7 documentation.

Note that from jBPM 5.3 jbpm console is capable of using any security domain that JBoss AS supports with just configuring it properly on application server so it is not limited to LDAP.

Finally it is time to look into the details of human task server configuration to make it use of LDAP as user repository. Those that are following jBPM development are already familiar with UserGroupCallback interface that is responsible for providing user and group/role information to the task server. So naturally LDAP integration is done through implementation of that interface.

Similar to configuring application server, ldap callback needs to be configured (with property file for instance). Here is a sample file that corresponds to the configuration used throughout the post:

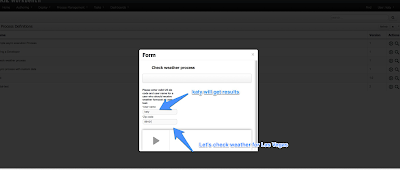

As from 5.3 by default human task server is deployed as web application on Jboss AS7. With this, user can simply adjust configuration of the human task server by editing its web.xml file. And this is how LDAP callback is registered.

Next put the jbpm.usergroup.callback.properties on the root of the classpath inside jbpm-human-task.war web application and your LDAP callback will be ready to rock!

In addition, when using deadlines on your tasks together with notification, there is one more step to configure so user information (for instance email address) could be retrieved from LDAP server. UserInfo interface is dedicated to providing this sort of information to the deadline handler and thus it's implementation needs to registered as well.

Similar as user group callback was registered it can be done via web.xml of human task web application:

It requires to be configured as well and it could be done via property file that should be named jbpm.user.info.properties and be placed on root of the class path.

As it shares most of the properties with callback configuration, in many cases users could use single file that contains all required values and instruct both implementation where to find this file with system properties:

With all that jBPM will now utilize your LDAP server for users and groups information whenever it needs them.

P.S.

This post is more of introduction to the LDAP integration rather than complete and comprehensive guide as it touches on high level all the components involved. More detailed information about configuring particular pieces can be found in jBPM documentation for 5.3 release.

Any comments are more than welcome.

So, let's start digging into it :)

User and group information are relevant to two components:

- jbpm console

- human task server

Human task server has bit more to do with users and groups. First of all it requires it when assigning task to entities (either user or group). Next, in case notification mechanism is configured it requires to fetch more information about the entity (such as email address, etc.)

Bit of heads up is always welcome, but how this can be configured and used? Firstly, we need to have a LDAP server that could be used as user repository. If you have already one this step can be omitted.

Install and configure LDAP server

Install and configure LDAP server of your choice, I personally use OpenLDAP that can be downloaded and used freely for evaluation purpose. Regardless of your choice most likely the best guide on installation and configuration is to be found on the server home page.

Note: make sure that when configuring your LDAP server inetOrgPerson schema is included otherwise import of example ldif will fail.

Once the server is up and running it's time to load it with some information that will be used later on by jBPM. Following is a sample setup of users and groups that matches the one used in previous versions of jBPM5 (Example ldif file expect that there is already domain configured (dc=jbpm,dc=org)):

Sample LDIF file

Rest of the post assumes that LDAP server is installed on the same machine as application server that hosts jbpm (but it is not limited to that).

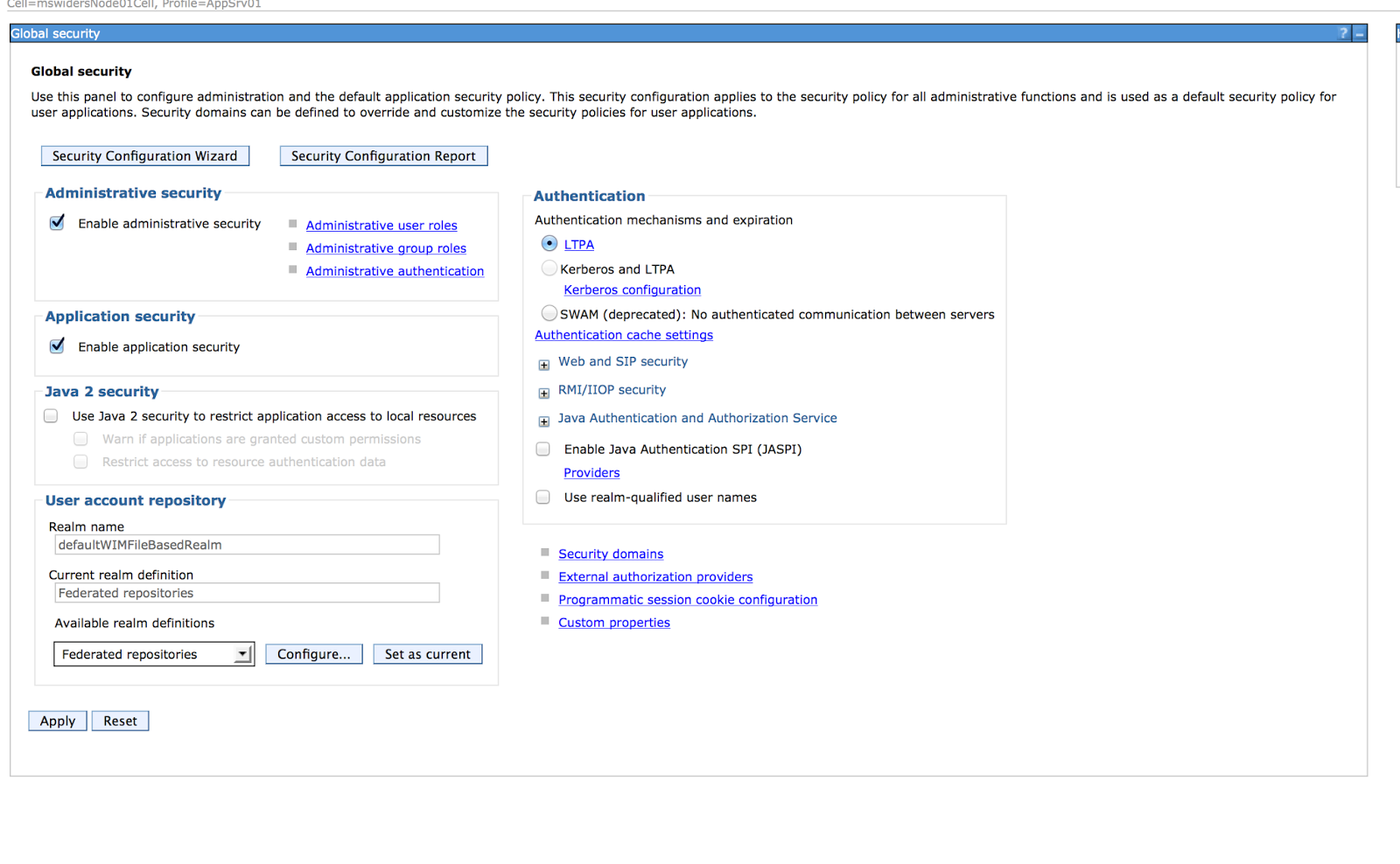

Configure JBoss AS7 security domain to use LDAP

Next step is to configure application server that host jbpm console to authenticate users using LDAP instead of the default property file based security domain. In fact there are no changes needed to jbpm console itself but only to JBoss AS7 configuration.

To use LDAP, jbpm-console security domain inside standalone.xml file should be replaced with:

<security-domain name="jbpm-console">

<authentication>

<login-module code="org.jboss.security.auth.spi.LdapExtLoginModule" flag="required">

<module-option name="baseCtxDN" value="ou=People,dc=jbpm,dc=org"/>

<module-option name="baseFilter" value="(uid={0})"/>

<module-option name="rolesCtxDN" value="ou=Roles,dc=jbpm,dc=org"/>

<module-option name="roleFilter" value="(member=uid={0},ou=People,dc=jbpm,dc=org)"/>

<module-option name="roleAttributeID" value="cn"/>

<module-option name="allowEmptyPasswords" value="true"/>

</login-module>

</authentication>

</security-domain>

This is pure JBoss configuration so in case of more advanced setup is required please visit JBoss AS7 documentation.

Note that from jBPM 5.3 jbpm console is capable of using any security domain that JBoss AS supports with just configuring it properly on application server so it is not limited to LDAP.

Configure Human task server to use LDAP

Finally it is time to look into the details of human task server configuration to make it use of LDAP as user repository. Those that are following jBPM development are already familiar with UserGroupCallback interface that is responsible for providing user and group/role information to the task server. So naturally LDAP integration is done through implementation of that interface.

Similar to configuring application server, ldap callback needs to be configured (with property file for instance). Here is a sample file that corresponds to the configuration used throughout the post:

ldap.user.ctx=ou\=People,dc\=jbpm,dc\=org

ldap.role.ctx=ou\=Roles,dc\=jbpm,dc\=org

ldap.user.roles.ctx=ou\=Roles,dc\=jbpm,dc\=org

ldap.user.filter=(uid\={0})

ldap.role.filter=(cn\={0})

ldap.user.roles.filter=(member\={0})

ldap.role.ctx=ou\=Roles,dc\=jbpm,dc\=org

ldap.user.roles.ctx=ou\=Roles,dc\=jbpm,dc\=org

ldap.user.filter=(uid\={0})

ldap.role.filter=(cn\={0})

ldap.user.roles.filter=(member\={0})

As from 5.3 by default human task server is deployed as web application on Jboss AS7. With this, user can simply adjust configuration of the human task server by editing its web.xml file. And this is how LDAP callback is registered.

<init-param>

<param-name>user.group.callback.class</param-name>

<param-value>org.jbpm.task.service.LDAPUserGroupCallbackImpl</param-value>

</init-param><param-name>user.group.callback.class</param-name>

<param-value>org.jbpm.task.service.LDAPUserGroupCallbackImpl</param-value>

Next put the jbpm.usergroup.callback.properties on the root of the classpath inside jbpm-human-task.war web application and your LDAP callback will be ready to rock!

In addition, when using deadlines on your tasks together with notification, there is one more step to configure so user information (for instance email address) could be retrieved from LDAP server. UserInfo interface is dedicated to providing this sort of information to the deadline handler and thus it's implementation needs to registered as well.

Similar as user group callback was registered it can be done via web.xml of human task web application:

<init-param>

<param-name>user.info.class</param-name>

<param-value>org.jbpm.task.service.LDAPUserInfoImpl</param-value>

<param-name>user.info.class</param-name>

<param-value>org.jbpm.task.service.LDAPUserInfoImpl</param-value>

</init-param>

It requires to be configured as well and it could be done via property file that should be named jbpm.user.info.properties and be placed on root of the class path.

As it shares most of the properties with callback configuration, in many cases users could use single file that contains all required values and instruct both implementation where to find this file with system properties:

-Djbpm.user.info.properties=classpath-location-and-file-name

-Djbpm.usergroup.callback.properties=classpath-location-and-file-name

With all that jBPM will now utilize your LDAP server for users and groups information whenever it needs them.

P.S.

This post is more of introduction to the LDAP integration rather than complete and comprehensive guide as it touches on high level all the components involved. More detailed information about configuring particular pieces can be found in jBPM documentation for 5.3 release.

Any comments are more than welcome.